Operating System: Xv6 on RISC-V - Part 2


Operating System: Xv6 on RISC-V - Syscall & Address translation

System Call in XV6

System calls are the APIs that let user space interact with kernel space and access resources and hardware without knowing the low-level details.

About System Call

- Allow processes to interact with the kernel by transitioning from user mode to kernel mode.

- Allow protected access from applications to memory, I/O devices and other protected subsystems

- Read and write files, create processes, allocate memory, etc.

- Usually invoked through C library functions (in glibc, for example, printf(), exit(), open(), etc.), which trap into kernel mode.

- When the OS service completes, it returns to user mode where the process resumes.

- Each system call has a unique number that identifies to the kernel which service is being requested.

Trap into the kernel

- System calls (planned): User -> Kernel

- SYSCALL for I/O, memory allocation, process creation etc.

- Exception(error): User -> Kernel

- Page fault, divide by zero, invalid instruction etc.

- Interrupt(event): Device -> Kernel

- Keyboard events, network packets, timer ticks, hardware events, etc.

System Call Implementation

- Add an entry for the new system call to the syscall array in the `syscall.c` file.

- Write the implementation of the system call in the `sysproc.c` file.

- Add the function prototype in the `syscall.c` file, e.g., `extern int sys_hello(void);`

- Define the system call number in the `syscall.h` file, e.g., `#define SYS_hello 22`

- Create an interface in the `usys.pl` file, e.g., `entry("hello");`, which allows the user to call the system call.

- Add the system call to the `user.h` file, e.g., `int hello(void);`
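The role of the syscall array can be pictured with a small user-space mock of the dispatch step. This is a sketch under stated assumptions, not xv6 source: the handler bodies and their return values are made up for illustration, `dispatch` is a hypothetical name, and `SYS_hello` = 22 simply follows the example above.

```c
#include <stddef.h>
#include <stdint.h>

/* Syscall numbers in the style of syscall.h (values assumed for this mock). */
#define SYS_getpid 11
#define SYS_hello  22

/* Mock handlers standing in for the sys_* functions in sysproc.c. */
uint64_t sys_getpid(void) { return 1; }   /* pretend the PID is 1 */
uint64_t sys_hello(void)  { return 42; }  /* arbitrary demo value */

/* Table indexed by syscall number, in the style of syscall.c.
 * Slots without a handler are left NULL. */
uint64_t (*syscalls[])(void) = {
    [SYS_getpid] = sys_getpid,
    [SYS_hello]  = sys_hello,
};

#define NELEM(x) (sizeof(x) / sizeof((x)[0]))

/* Look up the handler for num and call it; unknown numbers return -1. */
int64_t dispatch(int num)
{
    if (num > 0 && (size_t)num < NELEM(syscalls) && syscalls[num] != NULL)
        return (int64_t)syscalls[num]();
    return -1;
}
```

Calling `dispatch(SYS_hello)` runs the mock `sys_hello` handler, while a number with no table entry falls through to the error return. This is why a new system call needs both a number and a table entry.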

RISC-V Calling Convention

RISC-V uses ecall to invoke system calls

- a0-a5: syscall arguments (up to 6)

- a7: syscall number

- a0(after ecall): return value
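The register roles can be illustrated with a user-space mock of what happens around ecall. This is only a sketch: `struct regs`, `handle_ecall`, and the `SYS_add` call are hypothetical names invented for the example, and a real kernel reads these values from the saved user registers rather than from a plain struct.

```c
#include <stdint.h>

/* Mock of the saved user registers; only a0-a7 are modeled. */
struct regs {
    uint64_t a0, a1, a2, a3, a4, a5, a6, a7;
};

#define SYS_add 1   /* hypothetical syscall number for this sketch */

/* Hypothetical handler: adds its two arguments. */
uint64_t sys_add(uint64_t x, uint64_t y) { return x + y; }

/* Mimic the kernel side of ecall: read the syscall number from a7,
 * the arguments from a0 and a1, and write the result back into a0. */
void handle_ecall(struct regs *r)
{
    if (r->a7 == SYS_add)
        r->a0 = sys_add(r->a0, r->a1);
    else
        r->a0 = (uint64_t)-1;   /* unknown syscall number */
}
```

Note that a0 is both the first argument and the return value, so the argument is overwritten once the call returns.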

Address Translation

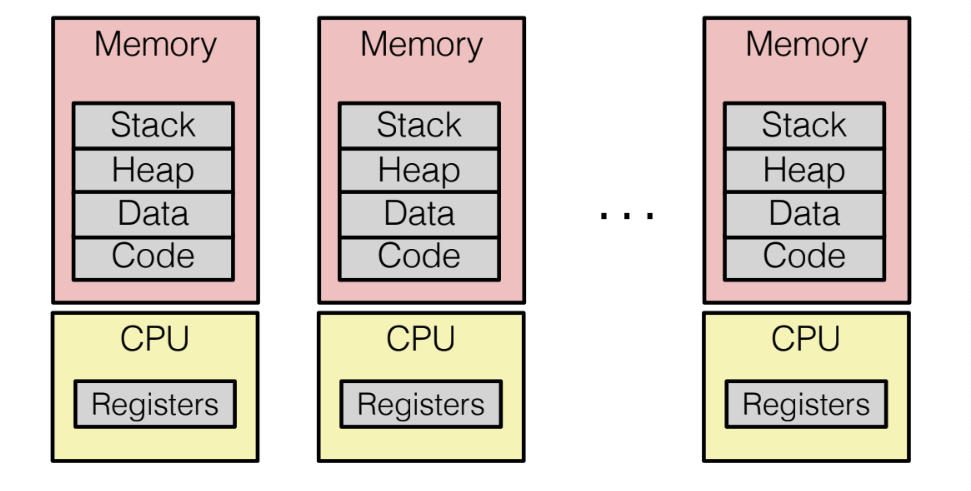

A computer runs multiple processes at the same time. Each process has its own memory (address space) and CPU state, and processes cannot see each other's state.

Physical Address

The main memory of a computer is organized as an array of M contiguous byte-sized cells. When the CPU wants to access a memory location, it sends the address to the memory controller (over the memory bus), which reads the data at that location and sends it back to the CPU, where it is stored in a register.

Virtual Address

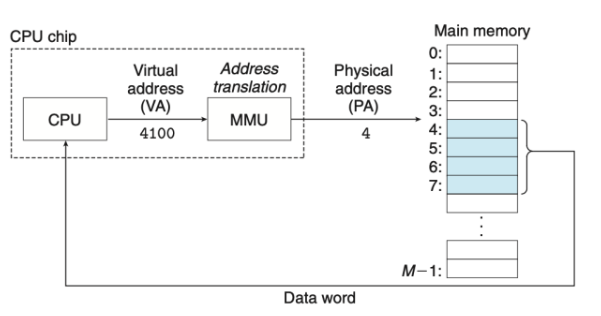

- The CPU accesses main memory by generating a virtual address, which is converted to the appropriate physical address before being sent to main memory.

- Address translation converts a virtual address to a physical address.

- Like exception handling, address translation requires cooperation between the CPU and the OS.

- Dedicated hardware on the CPU chip (MMU) translates virtual addresses on the fly, using a lookup table stored in main memory whose contents are managed by the operating system.

Physical VS Virtual Address

- Physical Address Space: the address space supported by the hardware; starts at address 0 and ends at address MAXsys

- Virtual Address Space: address space view of a process; Starts at address 0, going to address MAXprog

Address Spaces

- An address space is an ordered set of nonnegative integer addresses {0,1,2,…}

- In a system with virtual memory, the CPU generates virtual addresses from an address space of N = \(2^n\) addresses called the virtual address space: {0,1,2,…,N−1}

- The size of an address space is characterized by the number of bits that are needed to represent the largest address.

- e.g., a virtual address space with N = \(2^n\) addresses is called an n-bit address space.

- Modern systems typically support either 32-bit or 64-bit virtual address spaces.

Simple Memory Management

- Allocate a partition when a process is admitted into the system

- Allocate a contiguous memory partition to the process

The OS keeps track of:

- Full blocks

- Empty blocks ("holes")
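One way to picture this bookkeeping is a first-fit allocator over a sorted list of blocks. The text does not name a placement policy, so first fit is an assumption here, and `struct block`, `struct memmap`, and `alloc_partition` are hypothetical names for this sketch.

```c
#include <stddef.h>

#define NBLOCKS 8

/* One contiguous region of memory: either a full block or a hole. */
struct block {
    size_t start;
    size_t size;
    int    used;    /* 1 = full block, 0 = hole */
};

/* Hypothetical memory map; entries [0, nblocks) are sorted by start. */
struct memmap {
    struct block b[NBLOCKS];
    int nblocks;
};

/* First fit: take the first hole that is large enough.
 * Returns the start address of the new partition, or (size_t)-1. */
size_t alloc_partition(struct memmap *m, size_t size)
{
    for (int i = 0; i < m->nblocks; i++) {
        struct block *h = &m->b[i];
        if (h->used || h->size < size)
            continue;
        if (h->size > size && m->nblocks < NBLOCKS) {
            /* Split: shift later entries up and keep the remainder as a hole.
             * If the table is full, the whole hole is handed out instead. */
            for (int j = m->nblocks; j > i + 1; j--)
                m->b[j] = m->b[j - 1];
            m->b[i + 1] = (struct block){ h->start + size, h->size - size, 0 };
            m->nblocks++;
            h->size = size;
        }
        h->used = 1;
        return h->start;
    }
    return (size_t)-1;
}
```

Starting from a single 100-byte hole, requests of 30 and 50 bytes leave a 20-byte hole behind. A further 30-byte request then fails even though memory is not full, which is the fragmentation problem that paging avoids.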

Linux Address Space

Processes are managed by a shared kernel space.

The kernel is not a separate process, but rather runs as part of a user process.

In x86-64 Linux:

- Kernel: shared space with shared memory addresses

- User space: the same virtual address can point to different data in different processes

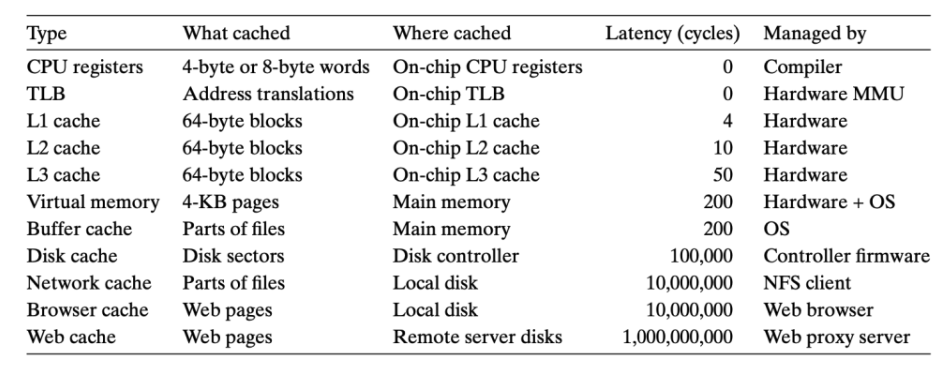

Caching in modern computer systems:

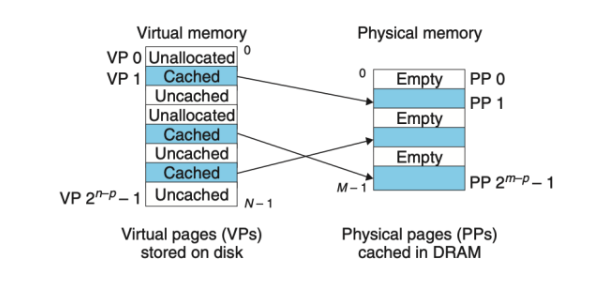

VM as a Tool for Caching

- Virtual memory is organized as an array of N contiguous bytes stored on disk. Each byte has a unique virtual address that serves as an index into the array.

- The contents of the array on disk are cached in main memory

- The data on disk is partitioned into blocks that serve as the transfer units between the disk and the main memory.

- VM systems handle this by partitioning the virtual memory into fixed-size blocks called virtual pages.
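With fixed-size pages, every virtual address splits into a page number and an offset within that page. A minimal sketch, assuming 4 KB pages (the page size used later in the page table size calculation); the helper names are made up for the example.

```c
#include <stdint.h>

#define PAGE_SHIFT 12                    /* assumed 4 KB pages: 2^12 bytes */
#define PAGE_SIZE  (1u << PAGE_SHIFT)

/* Virtual page number: which page the address falls in. */
uint64_t vpn_of(uint64_t va)    { return va >> PAGE_SHIFT; }

/* Offset of the address within its page. */
uint64_t offset_of(uint64_t va) { return va & (PAGE_SIZE - 1); }
```

For example, address 0x12345 lies in virtual page 0x12 at offset 0x345.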

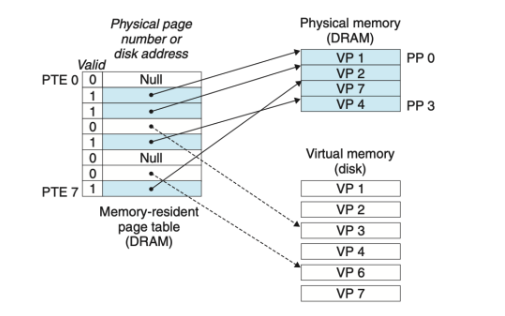

Page Table

- A page table is a data structure used in virtual memory systems to store the mapping information between virtual addresses and physical addresses.

- Page tables are stored in main memory

- The virtual memory system uses the page table (PT) to determine whether a virtual page is cached in DRAM.

If so, the system finds which physical page it is cached in. Otherwise, it finds where the virtual page is stored on disk, selects a victim page in physical memory, and copies the virtual page from disk to DRAM, replacing the victim page.

- Each page in the virtual address space has a page table entry (PTE) at a fixed offset in the page table.

- Each PTE consists of a valid bit and an n-bit address field. The valid bit indicates whether the virtual page is currently cached in DRAM.

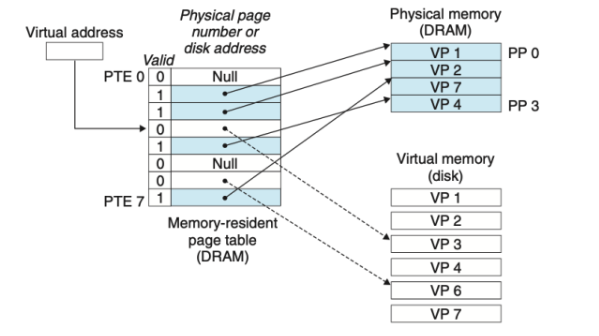

Page Fault

A page fault occurs when a program accesses a page that is not currently in memory.

- In this example, the page fault handler selects VP4 as the victim and replaces it with a copy of VP3 from disk.

- If VP4 has been modified, then the kernel copies it back to disk.

- After the page fault handler restarts the faulting instruction, it will read the word from memory normally, without generating an exception.
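The bookkeeping behind these steps can be sketched with a toy page table. This is a simulation under stated assumptions, not kernel code: the `dirty` flag, the `writebacks` counter, and `handle_page_fault` are hypothetical names, disk transfers are replaced by comments, and the choice of victim is left to the caller.

```c
#include <stdbool.h>

#define NVPAGES 8   /* virtual pages in this toy address space */

struct pte {
    bool valid;   /* page is cached in DRAM */
    bool dirty;   /* page was modified since it was loaded */
    int  ppn;     /* physical page number, meaningful only if valid */
};

/* Counts write-backs so the effect of the dirty flag is observable. */
int writebacks = 0;

/* Handle a fault on page vp by evicting victim and reusing its frame. */
void handle_page_fault(struct pte pt[NVPAGES], int vp, int victim)
{
    if (pt[victim].dirty)
        writebacks++;               /* stand-in for copying the victim to disk */
    pt[vp].ppn   = pt[victim].ppn;  /* stand-in for loading vp into that frame */
    pt[vp].valid = true;
    pt[vp].dirty = false;
    pt[victim].valid = false;
    pt[victim].dirty = false;
}
```

After `handle_page_fault(pt, 3, 4)`, VP3 is valid and owns the frame that VP4 used, so retrying the faulting access finds a valid PTE.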

Process Address Space

- The operating system provides a separate page table, and thus a separate virtual address space, for each process.

- Notice that multiple virtual pages can be mapped to the same shared physical page.

Memory Protection

- A user process should not modify its read-only code section. Nor should it be allowed to read or modify any of the code and data structures in the kernel.

- Each PTE has three permission bits. The SUP bit indicates whether processes must be running in kernel (supervisor) mode to access the page.

- Processes running in kernel mode can access any page

- Processes running in user mode are only allowed to access pages whose SUP bit is 0.
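The check the hardware performs on each access can be sketched as a small predicate. Only the SUP bit is named in the text; the `read` and `write` fields below are assumed names for the other two permission bits, and `access_ok` is a hypothetical helper.

```c
#include <stdbool.h>

/* Permission bits of a PTE in this sketch. */
struct pte_perm {
    bool sup;    /* page requires supervisor (kernel) mode */
    bool read;   /* assumed: user code may read the page */
    bool write;  /* assumed: user code may write the page */
};

/* Returns true if the access is allowed, false if it should fault. */
bool access_ok(struct pte_perm p, bool kernel_mode, bool is_write)
{
    if (kernel_mode)
        return true;               /* kernel mode can access any page */
    if (p.sup)
        return false;              /* user code touching a kernel page */
    return is_write ? p.write : p.read;
}
```

With a read-only code page (`sup` = 0, `read` = 1, `write` = 0), a user-mode read passes and a user-mode write is rejected, which matches the first bullet above.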

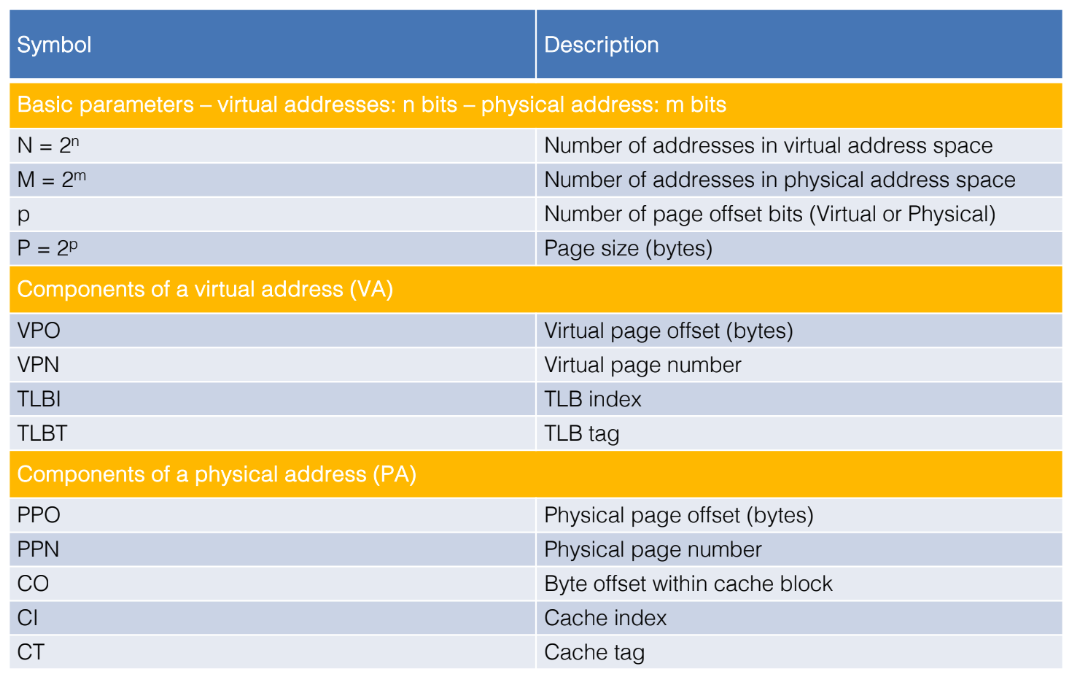

Address Translation Symbols

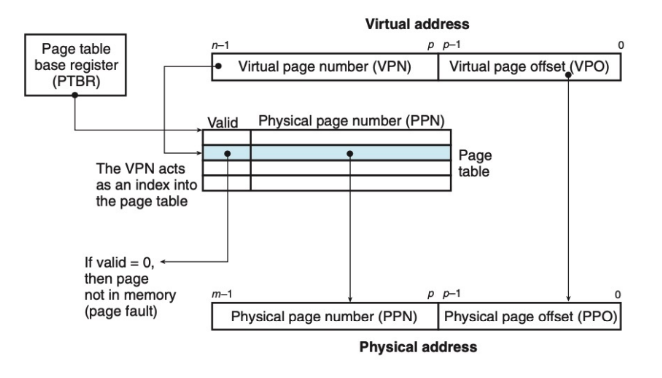

Address Translation With a Page Table

- The processor generates a virtual address and sends it to the MMU.

- The MMU uses the VPN to locate the appropriate PTE (e.g., VPN 0 selects PTE 0, VPN 1 selects PTE 1, etc.), generates the PTE address, and requests it from the cache/main memory.

- The cache/main memory returns the PTE to the MMU.

- The MMU constructs the physical address and sends it to the cache/main memory.

- The cache/main memory returns the requested data word to the processor.
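The work the MMU does in these steps can be sketched in software with a single-level page table. The sketch assumes 4 KB pages and a toy 16-page address space; `struct pte` and `translate` are illustrative names, not a real MMU interface.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SHIFT 12          /* assumed 4 KB pages */
#define NPAGES     16          /* toy address space: 16 virtual pages */

struct pte {
    bool     valid;            /* page is cached in DRAM */
    uint64_t ppn;              /* physical page number */
};

/* Translate va using a single-level page table.
 * Returns true and stores the physical address in *pa on success;
 * returns false when the page is not valid (a page fault in a real system). */
bool translate(const struct pte pt[NPAGES], uint64_t va, uint64_t *pa)
{
    uint64_t vpn = va >> PAGE_SHIFT;                 /* selects the PTE */
    uint64_t vpo = va & ((1u << PAGE_SHIFT) - 1);    /* offset within page */

    if (vpn >= NPAGES || !pt[vpn].valid)
        return false;
    *pa = (pt[vpn].ppn << PAGE_SHIFT) | vpo;         /* PPN followed by offset */
    return true;
}
```

If virtual page 2 maps to physical page 7, virtual address 0x2abc translates to 0x7abc: the page number changes and the offset is copied unchanged.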

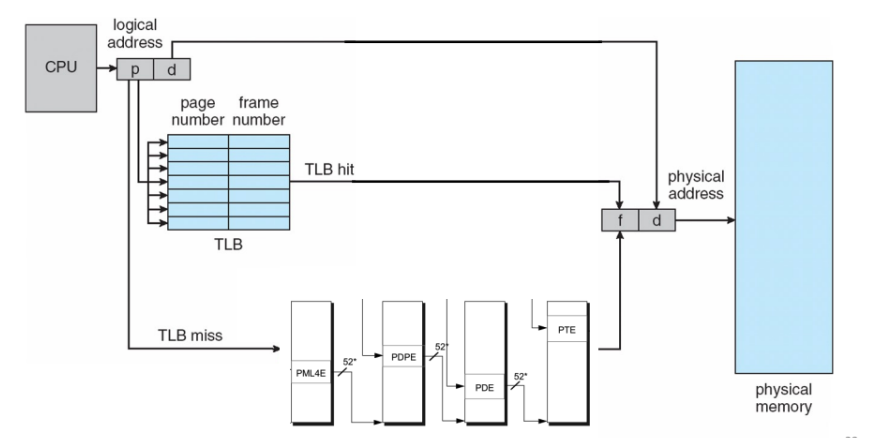

Speeding Up Address Translation

Problem:

Every time the CPU generates a virtual address, the MMU must refer to a PTE in order to translate the virtual address into a physical address. This requires an additional fetch from memory, at a cost of tens to hundreds of cycles.

Solution:

Include a small cache of PTEs in the MMU called a Translation Lookaside Buffer(TLB). The TLB is a small, virtually addressed cache where each line holds a block consisting of a single PTE.

When using the TLB, the MMU first checks the TLB to see if the PTE is cached there. If so, the MMU uses the PTE to translate the virtual address into a physical address. If not, the MMU fetches the PTE from the cache/main memory and caches it in the TLB.
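The hit and miss paths can be sketched with a tiny software TLB. This is a simplification under stated assumptions: the TLB is searched linearly, entries are replaced round-robin, the page table is reduced to an array of PPNs, and all names (`struct tlb`, `lookup_ppn`) are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define TLB_ENTRIES 4
#define NPAGES      16

struct tlb_entry { bool valid; uint64_t vpn, ppn; };

struct tlb {
    struct tlb_entry e[TLB_ENTRIES];
    int next;          /* next slot to overwrite (round-robin, assumed policy) */
    int hits, misses;
};

/* Return the PPN for vpn, consulting the TLB before the page table.
 * page_table[vpn] holds the PPN; the caller must pass vpn < NPAGES,
 * and validity checks are omitted for brevity. */
uint64_t lookup_ppn(struct tlb *t, const uint64_t page_table[NPAGES], uint64_t vpn)
{
    for (int i = 0; i < TLB_ENTRIES; i++) {
        if (t->e[i].valid && t->e[i].vpn == vpn) {
            t->hits++;
            return t->e[i].ppn;           /* TLB hit: no page table access */
        }
    }
    t->misses++;
    uint64_t ppn = page_table[vpn];       /* TLB miss: fetch the PTE */
    t->e[t->next] = (struct tlb_entry){ true, vpn, ppn };
    t->next = (t->next + 1) % TLB_ENTRIES;
    return ppn;
}
```

The first lookup of a page misses and fills the TLB; repeated lookups of the same page then hit without touching the page table.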

TLB Index and Tag

- The index and tag fields are extracted from the virtual page number in the virtual address.

- If the TLB has \(T = 2^t\) sets, then the TLB index (TLBI) consists of the t least significant bits of the VPN, and the TLB tag (TLBT) consists of the remaining bits in the VPN.

- During a TLB lookup, the TLBI is used first to quickly select a candidate entry or set of entries. Each entry can only live in the set chosen by its TLBI, much like direct mapping in a hardware cache.

- The tags of the entries in that set are then compared one by one with the TLBT to confirm whether any of them actually matches the requested virtual address. The lookup counts as a TLB hit only if the TLBT also matches.
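The split of the VPN into index and tag is plain bit manipulation. A minimal sketch, assuming t = 2 (a TLB with 4 sets); the function names are made up for the example.

```c
#include <stdint.h>

#define TLB_INDEX_BITS 2   /* t: assumed 4 sets for this sketch */

/* TLB index: the t least significant bits of the VPN. */
uint64_t tlbi(uint64_t vpn) { return vpn & ((1u << TLB_INDEX_BITS) - 1); }

/* TLB tag: the remaining high bits of the VPN. */
uint64_t tlbt(uint64_t vpn) { return vpn >> TLB_INDEX_BITS; }
```

For VPN 0x0D (binary 1101), the index is 01 and the tag is 11, so the lookup goes to set 1 and compares the stored tags against 3.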

Calculate the Page Table Size

If we had a 32-bit address space, 4 KB pages, and a 4-Byte PTE

- Size of address space: 32-bit addresses give a total address space of \(2^{32}\) bytes, which is 4 GB

- Size of page: each 4 KB page is \(2^{12}\) bytes

- Number of pages: \(2^{32}/2^{12} = 2^{20}\)

- Size of PTE: 4 Bytes

- Size of page table: \(2^{20} \times 4\ \text{bytes} = 4\ \text{MB}\)
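The same arithmetic can be wrapped in a small helper to try other configurations. `page_table_bytes` is a hypothetical function written for this note, and it assumes a flat (single-level) table with one PTE per virtual page.

```c
#include <stdint.h>

/* Size in bytes of a flat page table with one PTE per virtual page.
 * addr_bits: width of a virtual address; page_bits: log2 of the page size. */
uint64_t page_table_bytes(unsigned addr_bits, unsigned page_bits, uint64_t pte_bytes)
{
    uint64_t num_pages = 1ull << (addr_bits - page_bits);
    return num_pages * pte_bytes;
}
```

`page_table_bytes(32, 12, 4)` gives the 4 MB computed above. With 48-bit addresses, 4 KB pages, and 8-byte PTEs, the result is \(2^{39}\) bytes (512 GB) per process, which is why flat tables do not scale and multi-level tables are used instead.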

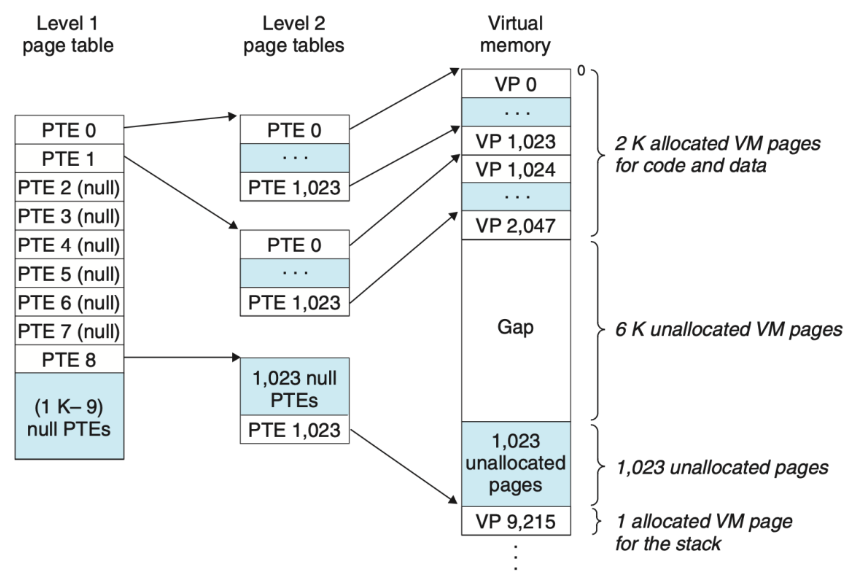

Multi-Level Page Table - Example

- Each PTE in the level 1 table is responsible for mapping a 4 MB chunk of the virtual address space. Each chunk consists of 1,024 contiguous pages, e.g., PTE 0 maps the first chunk, PTE 1 the next chunk, etc.

- Given that the address space is 4 GB (\(2^{32}\) bytes), 1,024 PTEs are sufficient to cover the entire space.

- If every page in chunk i is unallocated, then level 1 PTE i is null.

- Each PTE in a level 2 page table is responsible for mapping a 4 KB page of virtual memory.

- With 4-byte PTEs, each level 1 and level 2 page table is 4 KB (1,024 entries of 4 bytes each), which conveniently is the same size as a page.

- If a PTE in the level 1 table is null, then the corresponding level 2 page table does not have to exist.

- Only the level 1 table needs to be in main memory at all times. The level 2 page tables can be created and paged in and out by the VM as needed.
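A two-level walk for this 32-bit layout can be sketched as follows. The 10 | 10 | 12 bit split follows from 1,024 entries per table and 4 KB pages; the struct layouts and the name `walk` are assumptions for illustration and are not packed into 4-byte PTEs the way real tables would be.

```c
#include <stddef.h>
#include <stdint.h>

#define ENTRIES 1024   /* 2^10 PTEs per table */

/* Simplified PTE for this sketch (not a packed 4-byte hardware format). */
struct pte { int valid; uint32_t ppn; };

/* Level 2 table: one PTE per 4 KB page. */
struct l2_table { struct pte pte[ENTRIES]; };

/* Level 1 table: one pointer per 4 MB chunk; NULL means no level 2 table. */
struct l1_table { struct l2_table *l2[ENTRIES]; };

/* Walk both levels for a 32-bit va split as 10 | 10 | 12 bits.
 * Returns 0 and sets *pa on success, -1 if the address is unmapped. */
int walk(const struct l1_table *l1, uint32_t va, uint32_t *pa)
{
    uint32_t vpn1 = va >> 22;             /* index into the level 1 table */
    uint32_t vpn2 = (va >> 12) & 0x3ff;   /* index into the level 2 table */
    uint32_t vpo  = va & 0xfff;           /* offset within the page */

    const struct l2_table *l2 = l1->l2[vpn1];
    if (l2 == NULL || !l2->pte[vpn2].valid)
        return -1;                        /* null level 1 PTE or invalid page */
    *pa = (l2->pte[vpn2].ppn << 12) | vpo;
    return 0;
}
```

An address in a chunk whose level 1 entry is NULL fails immediately, without any level 2 table having to exist. That is where the memory saving comes from.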

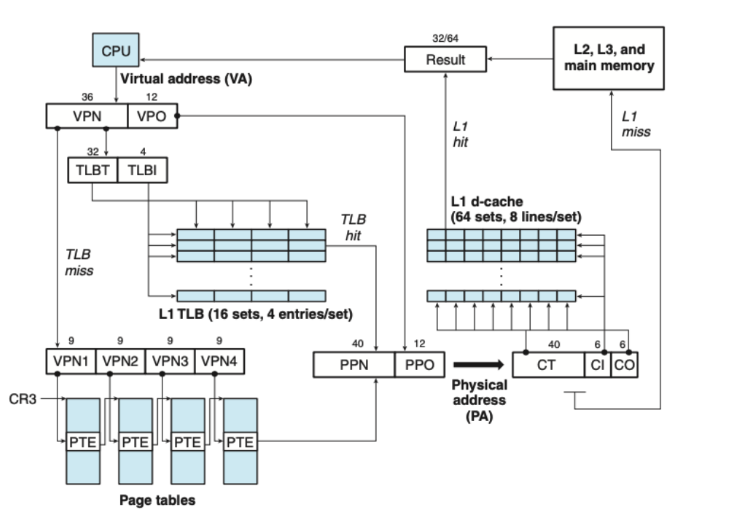

Intel Core i7/Linux Memory System

Jump to next Part3:page/page_table